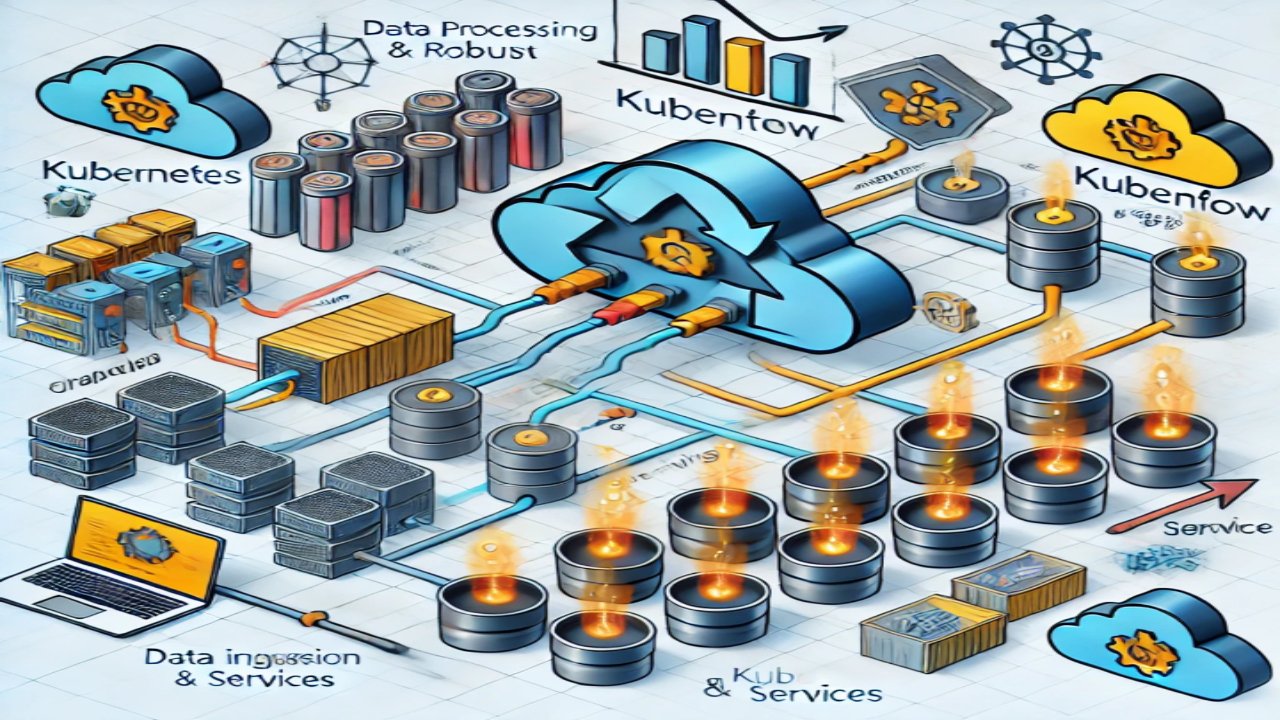

In today’s data-driven world, machine learning models have become an integral part of many businesses. However, deploying and managing these models at scale can be a challenging task. In this technical blog, we will explore how to build a scalable and robust ML model deployment pipeline using Kubernetes and Kubeflow. These two powerful tools are widely used in the MLOps (Machine Learning Operations) space.

Setting up a Kubernetes Cluster

Setting up a Kubernetes cluster is the foundational step in deploying machine learning models at scale. It involves orchestrating containers across multiple nodes, enabling seamless scaling and management of applications. Kubernetes simplifies container orchestration, making it an ideal choice for ML model deployment.

Introduction to Kubernetes

Kubernetes, often abbreviated as K8s, is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications. It provides a robust framework for managing containerized workloads, including machine learning models, in a highly efficient and scalable manner.

Setting up a Cluster Using a Managed Kubernetes Service

Managed Kubernetes services like Google Kubernetes Engine (GKE), AWS Elastic Kubernetes Service (EKS), and Azure Kubernetes Service (AKS) offer a hassle-free way to set up and manage Kubernetes clusters. These services abstract away much of the underlying infrastructure complexity, allowing organizations to focus on deploying and running their ML models without worrying about cluster maintenance.

Introduction to Kubeflow

Kubeflow is an open-source platform designed to simplify and accelerate the development, deployment, and management of machine learning workflows on Kubernetes. It offers a comprehensive set of tools and components that streamline the MLOps process.

What is Kubeflow?

Kubeflow is a machine learning toolkit built for Kubernetes, enabling organizations to orchestrate, deploy, and manage ML workloads with ease. It promotes the best practices of MLOps by providing a unified platform for data scientists and engineers to collaborate seamlessly.

Key components of Kubeflow

Pipelines

Kubeflow Pipelines lets you define, orchestrate, and automate your ML workflows as code. It also provides a visual interface for inspecting pipelines and their runs, making it easier to manage the end-to-end ML process.

Katib

Katib is Kubeflow’s hyperparameter tuning component. It automates the search for optimal hyperparameters, improving model performance and efficiency by conducting experiments in parallel.

Training Operators

Kubeflow Training Operators simplify model training and scaling. They help manage distributed training across multiple nodes or GPUs, making it easier to scale your ML workloads.
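As a rough illustration of what a distributed training job can look like, the sketch below builds a PyTorchJob manifest as a plain Python dictionary and prints it as JSON, which `kubectl apply -f` accepts. The job name, image, and GPU count are hypothetical placeholders, and the field names reflect the Training Operator's `kubeflow.org/v1` API as commonly documented, so check them against the version installed in your cluster.

```python
import json


def pytorch_job(name: str, image: str, workers: int, gpus_per_replica: int = 1) -> dict:
    """Build a PyTorchJob manifest with one master and `workers` worker replicas."""

    def replica(count: int) -> dict:
        return {
            "replicas": count,
            "restartPolicy": "OnFailure",
            "template": {"spec": {"containers": [{
                "name": "pytorch",  # container name the operator conventionally expects
                "image": image,
                "resources": {"limits": {"nvidia.com/gpu": gpus_per_replica}},
            }]}},
        }

    return {
        "apiVersion": "kubeflow.org/v1",
        "kind": "PyTorchJob",
        "metadata": {"name": name},
        "spec": {"pytorchReplicaSpecs": {"Master": replica(1), "Worker": replica(workers)}},
    }


# Hypothetical job name and image, used only for illustration.
manifest = pytorch_job("mnist-train", "registry.example.com/mnist-train:latest", workers=3)
print(json.dumps(manifest, indent=2))
```

Scaling the job out is then a matter of changing `workers`, which is the main convenience the operators provide.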

Serving

Kubeflow Serving (the model serving project now known as KServe, formerly KFServing) facilitates model deployment and scaling in production. It supports multiple serving frameworks and allows you to manage different versions of your models for A/B testing and gradual rollouts.

Preparing Your ML Model

Data Preprocessing and Feature Engineering

Data preprocessing is a critical step where we clean, transform, and prepare the raw data for modeling. It involves tasks like handling missing values, encoding categorical variables, and scaling features. Feature engineering goes beyond that, creating new features or modifying existing ones to improve model performance. Techniques may include one-hot encoding, normalization, or generating interaction features.
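The three basic steps named above (handling missing values, scaling, and one-hot encoding) can be sketched in plain Python. This is a minimal illustration using only the standard library; in practice you would likely use a library such as pandas or scikit-learn, and the sample values are made up.

```python
def impute_mean(values):
    """Replace None entries with the mean of the observed values."""
    present = [v for v in values if v is not None]
    mean = sum(present) / len(present)
    return [mean if v is None else v for v in values]


def min_max_scale(values):
    """Rescale values linearly into the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # constant column: nothing to scale
    return [(v - lo) / (hi - lo) for v in values]


def one_hot(values):
    """Return the sorted category list and one indicator row per value."""
    categories = sorted(set(values))
    return categories, [[1 if v == c else 0 for c in categories] for v in values]


ages = impute_mean([20, None, 40])                        # [20, 30.0, 40]
scaled_ages = min_max_scale(ages)                         # [0.0, 0.5, 1.0]
categories, encoded = one_hot(["red", "blue", "red"])     # ['blue', 'red'], [[0, 1], [1, 0], [0, 1]]
```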

Model Training and Evaluation

Once the data is preprocessed, we train machine learning models on it. Model training involves selecting an appropriate algorithm, fitting it to the data, and optimizing its parameters. After training, evaluation is crucial to assess model performance. Common metrics include accuracy, precision, recall, and F1-score for classification models and RMSE, MAE, or R-squared for regression. Cross-validation helps ensure the model’s generalizability.
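To make the classification metrics concrete, here is a small standard-library sketch that computes accuracy, precision, recall, and F1-score for a binary classifier. The labels and predictions are invented for illustration.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall, and F1 for a binary problem."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Made-up labels: 3 true positives, 1 false positive, 1 false negative.
y_true = [1, 0, 1, 1, 0, 1]
y_pred = [1, 0, 0, 1, 1, 1]
metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

With these inputs, precision and recall are both 3/4, and accuracy is 4/6 because four of the six predictions match.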

Building a Kubeflow Pipeline

Kubeflow pipelines are a powerful way to orchestrate and automate machine learning workflows. They enable you to define, schedule, and monitor complex ML tasks, making it easier to go from data preprocessing to model deployment in a structured and repeatable manner.

Defining a Kubeflow Pipeline with Python SDK

The Kubeflow Python SDK allows you to define your ML pipeline as code. You can specify the sequence of tasks, their inputs and outputs, and any custom logic needed to build a complete end-to-end ML workflow. This code-driven approach enhances collaboration and ensures reproducibility.

Orchestrating and Automating the ML Workflow

With Kubeflow pipelines, you can orchestrate every step of your ML workflow, from data ingestion and preprocessing to model training and evaluation. Automation ensures consistency, reduces manual effort, and allows easy scaling as your ML projects become more complex.
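The core idea behind pipeline orchestration is that each step runs only after the steps it depends on, with outputs passed downstream. The sketch below illustrates that idea with Python's standard `graphlib` module (Python 3.9+). It is not the Kubeflow Pipelines SDK, and the step names and toy functions are hypothetical.

```python
from graphlib import TopologicalSorter


def run_pipeline(steps, dependencies):
    """Run each step after its dependencies, passing upstream outputs as inputs."""
    results = {}
    order = list(TopologicalSorter(dependencies).static_order())
    for name in order:
        inputs = {dep: results[dep] for dep in dependencies.get(name, ())}
        results[name] = steps[name](inputs)
    return order, results


# Toy steps standing in for real ingestion, preprocessing, training, and evaluation.
steps = {
    "ingest": lambda inputs: [3, 1, 2],
    "preprocess": lambda inputs: sorted(inputs["ingest"]),
    "train": lambda inputs: {"mean": sum(inputs["preprocess"]) / len(inputs["preprocess"])},
    "evaluate": lambda inputs: max(
        abs(x - inputs["train"]["mean"]) for x in inputs["preprocess"]
    ),
}

# Each key lists the steps that must finish before it can start.
dependencies = {
    "preprocess": {"ingest"},
    "train": {"preprocess"},
    "evaluate": {"train", "preprocess"},
}

order, results = run_pipeline(steps, dependencies)
print(order)
print(results["evaluate"])
```

In a real Kubeflow pipeline each step would run in its own container, but the dependency-driven ordering works the same way.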

Versioning and Artifact Tracking

Effective versioning and artifact tracking are crucial for maintaining transparency and reproducibility in ML projects. Kubeflow provides mechanisms for tracking datasets, models, and code changes, allowing you to pinpoint the exact versions used in each step of your pipeline. This helps in debugging and auditing model performance over time.
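One simple way to pin down "the exact versions used" is to fingerprint each input by content hash and record the hashes alongside the run. The sketch below shows that idea with the standard library only. It is not Kubeflow's own metadata mechanism, and the dataset bytes, parameters, and commit identifier are hypothetical.

```python
import hashlib
import json


def fingerprint(payload: bytes) -> str:
    """Return the SHA-256 hex digest of a byte string."""
    return hashlib.sha256(payload).hexdigest()


def run_record(dataset: bytes, params: dict, code_version: str) -> dict:
    """Describe a pipeline run by the content hashes of its inputs."""
    # Sorting keys makes the hash independent of dictionary ordering.
    canonical_params = json.dumps(params, sort_keys=True).encode()
    record = {
        "dataset_sha256": fingerprint(dataset),
        "params_sha256": fingerprint(canonical_params),
        "code_version": code_version,
    }
    record["run_id"] = fingerprint(json.dumps(record, sort_keys=True).encode())[:12]
    return record


# Hypothetical inputs: raw CSV bytes, hyperparameters, and a Git commit hash.
first = run_record(b"age,label\n20,0\n40,1\n", {"lr": 0.01, "epochs": 5}, "abc1234")
same = run_record(b"age,label\n20,0\n40,1\n", {"epochs": 5, "lr": 0.01}, "abc1234")
changed = run_record(b"age,label\n20,0\n41,1\n", {"lr": 0.01, "epochs": 5}, "abc1234")
print(first["run_id"], same["run_id"], changed["run_id"])
```

Identical inputs produce the same `run_id`, while a one-character change in the dataset produces a different one, which is what makes later audits tractable.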

Model Hyperparameter Tuning with Katib

Setting up hyperparameter tuning experiments

Hyperparameter tuning is a critical step in optimizing machine learning models. With Katib, you can easily set up experiments to explore different hyperparameter configurations. Define the range of values for parameters like learning rate, batch size, or the number of layers in your model. Katib will then run multiple trials, adjusting these hyperparameters automatically, and help you discover the best configuration for your model’s performance.
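A Katib experiment is described declaratively. The sketch below builds the search-space portion of an Experiment manifest as a Python dictionary for the three parameters mentioned above. The experiment name, metric name, and value ranges are hypothetical, the field names follow the Katib `v1beta1` API as commonly documented, and the `trialTemplate` section (which defines the job each trial runs) is deliberately left out, so this fragment is not deployable on its own.

```python
import json


def katib_experiment(name: str, metric: str, max_trials: int, parallel_trials: int) -> dict:
    """Build the objective and search space of a Katib Experiment manifest."""
    if parallel_trials > max_trials:
        raise ValueError("parallel_trials cannot exceed max_trials")
    return {
        "apiVersion": "kubeflow.org/v1beta1",
        "kind": "Experiment",
        "metadata": {"name": name},
        "spec": {
            "objective": {"type": "maximize", "objectiveMetricName": metric},
            "algorithm": {"algorithmName": "random"},
            "maxTrialCount": max_trials,
            "parallelTrialCount": parallel_trials,
            "parameters": [
                {"name": "learning_rate", "parameterType": "double",
                 "feasibleSpace": {"min": "0.0001", "max": "0.1"}},
                {"name": "batch_size", "parameterType": "int",
                 "feasibleSpace": {"min": "16", "max": "128"}},
                {"name": "num_layers", "parameterType": "int",
                 "feasibleSpace": {"min": "2", "max": "8"}},
            ],
            # NOTE: trialTemplate is intentionally omitted in this sketch.
        },
    }


experiment = katib_experiment("tune-classifier", "accuracy", max_trials=12, parallel_trials=3)
print(json.dumps(experiment, indent=2))
```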

Monitoring and optimizing model performance

Monitoring model performance in real-time is essential for maintaining a high-quality ML system. Katib provides built-in metrics collection and visualization tools to track how your different hyperparameter configurations impact model performance. By continuously monitoring these metrics, you can identify trends and make informed decisions to optimize your model further. Katib’s automation helps streamline the process, making it easier to achieve better results with less manual effort.

Deploying Models with Kubeflow Serving

Model packaging and serving

To deploy models with Kubeflow Serving, you need to package your trained model into a containerized format, typically a Docker container. Kubeflow Serving provides a clean and consistent way to serve these models as Kubernetes deployments, making it easy to manage and scale your models in a production environment.

Scaling model deployments

Kubeflow Serving lets you scale your model deployments horizontally. By adjusting the number of replicas for a model serving deployment, you can absorb increased workloads and keep response latency low, which makes it suitable for serving machine learning models to many concurrent users.
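The sketch below shows how replica bounds might be expressed for a served model. It assumes the serving layer is KServe (the project formerly called KFServing) and builds an InferenceService manifest as a Python dictionary. The service name and storage URI are hypothetical, and the field names follow the `serving.kserve.io/v1beta1` API as commonly documented, so verify them against your installed version.

```python
import json


def inference_service(name: str, storage_uri: str, min_replicas: int,
                      max_replicas: int, model_format: str = "sklearn") -> dict:
    """Build an InferenceService manifest with autoscaling bounds."""
    if not 0 <= min_replicas <= max_replicas:
        raise ValueError("expected 0 <= min_replicas <= max_replicas")
    return {
        "apiVersion": "serving.kserve.io/v1beta1",
        "kind": "InferenceService",
        "metadata": {"name": name},
        "spec": {"predictor": {
            "minReplicas": min_replicas,
            "maxReplicas": max_replicas,
            "model": {
                "modelFormat": {"name": model_format},
                "storageUri": storage_uri,
            },
        }},
    }


# Hypothetical model location, used only for illustration.
service = inference_service(
    "churn-model", "gs://example-bucket/models/churn/v1", min_replicas=2, max_replicas=10
)
print(json.dumps(service, indent=2))
```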

A/B testing and canary releases

With Kubeflow Serving, you can perform A/B testing and canary releases by deploying multiple versions of your model simultaneously. This enables you to compare the performance of different model versions in a controlled manner. By directing a fraction of the traffic to new versions (canary releases) or splitting traffic between different models (A/B testing), you can make informed decisions about model improvements and updates.
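To illustrate how a fraction of traffic can be steered to a new version, the sketch below assigns each request to "canary" or "stable" by hashing a request identifier into one of 100 buckets. This is a conceptual illustration, not how the serving layer itself implements traffic splitting, and the identifiers are made up.

```python
import hashlib


def route(request_id: str, canary_percent: int) -> str:
    """Deterministically route a request to the canary or the stable version."""
    digest = hashlib.sha256(request_id.encode()).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100  # bucket in 0..99
    return "canary" if bucket < canary_percent else "stable"


# Simulate 10,000 hypothetical users with a 10% canary.
assignments = [route(f"user-{i}", 10) for i in range(10_000)]
canary_share = assignments.count("canary") / len(assignments)
print(f"canary share: {canary_share:.3f}")
```

Hashing the identifier rather than drawing a random number keeps each user on the same version across requests, which makes A/B comparisons cleaner.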

Continuous Integration and Continuous Deployment (CI/CD)

- Continuous Integration (CI) is the practice of automatically integrating code changes into a shared repository, enabling frequent code testing and early detection of integration issues.

- Continuous Deployment (CD) extends CI by automating the deployment of successfully tested code changes to production or staging environments.

- CI/CD pipelines help ensure code quality, reduce manual errors, and accelerate the software development and deployment process.

- Kubeflow pipelines can be integrated into CI/CD workflows to automate machine learning model training, evaluation, and deployment processes.

- CI/CD for machine learning allows data scientists and engineers to collaborate seamlessly and deliver ML models to production faster and more reliably.
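A common building block in CI/CD for ML is a promotion gate: a check that runs after evaluation and decides whether a candidate model may be deployed. The sketch below is one minimal way to express such a gate. The thresholds and metric values are hypothetical.

```python
def promotion_decision(candidate: dict, baseline: dict,
                       min_accuracy: float = 0.90, max_regression: float = 0.01):
    """Return (approved, reasons) for promoting a candidate model."""
    reasons = []
    if candidate["accuracy"] < min_accuracy:
        reasons.append(
            f"accuracy {candidate['accuracy']:.3f} is below the floor {min_accuracy:.3f}"
        )
    if candidate["accuracy"] < baseline["accuracy"] - max_regression:
        reasons.append(
            f"accuracy regressed by more than {max_regression:.3f} against the baseline"
        )
    return (not reasons), reasons


# Hypothetical evaluation results for the current and candidate models.
baseline = {"accuracy": 0.93}
approved, reasons = promotion_decision({"accuracy": 0.94}, baseline)
rejected, why = promotion_decision({"accuracy": 0.88}, baseline)
print(approved, reasons)
print(rejected, why)
```

In a CI job, a rejected decision would typically translate into a non-zero exit code so the pipeline stops before deployment.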

Automating Model Deployments with GitOps

- GitOps is a modern approach to managing infrastructure and application deployments using Git as the source of truth for declarative configurations.

- With GitOps, infrastructure changes, including ML model deployments, are version-controlled in Git repositories, enhancing traceability and collaboration.

- Automation tools like Argo CD or Flux continuously monitor Git repositories, ensuring the infrastructure matches the desired state defined in Git.

- Automating model deployments with GitOps ensures consistency, repeatability, and rollback capabilities, which are crucial for ML model productionization.

- GitOps simplifies the deployment process, making it easier for teams to manage and scale ML model deployments across Kubernetes clusters.
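The reconciliation idea behind GitOps can be sketched as a comparison between the desired state stored in Git and the live state in the cluster. The example below is a conceptual illustration only, not how Argo CD or Flux are implemented, and the resource names and image tags are hypothetical.

```python
def plan(desired: dict, live: dict) -> dict:
    """Compute which resources to create, update, or delete to match Git."""
    return {
        "create": sorted(desired.keys() - live.keys()),
        "update": sorted(
            name for name in desired.keys() & live.keys() if desired[name] != live[name]
        ),
        "delete": sorted(live.keys() - desired.keys()),
    }


# Desired state as it might be declared in a Git repository.
desired = {
    "churn-model": {"image": "churn:v2"},
    "fraud-model": {"image": "fraud:v1"},
}
# Live state as it might be observed in the cluster.
live = {
    "churn-model": {"image": "churn:v1"},
    "legacy-model": {"image": "legacy:v7"},
}

changes = plan(desired, live)
print(changes)
```

Rolling back is then just reverting the commit: the desired state changes, and the same comparison produces the plan that restores the previous deployment.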

Monitoring model performance in production

Monitoring model performance in production is crucial to ensure that your machine learning models continue to deliver accurate and reliable results. Key metrics such as accuracy, latency, and resource utilization should be tracked continuously. Tools like Prometheus and Grafana can help you collect and visualize these metrics, allowing you to detect issues and optimize model performance in real time.
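Prometheus scrapes metrics in a simple text format, so it helps to see what a model-serving metric looks like on the wire. The sketch below renders a gauge by hand using only the standard library. In practice you would more likely use an official client library, and the metric name, labels, and value are hypothetical.

```python
def render_gauge(name: str, help_text: str, samples) -> str:
    """Render gauge samples in the Prometheus text exposition format."""
    lines = [f"# HELP {name} {help_text}", f"# TYPE {name} gauge"]
    for labels, value in samples:
        label_str = ",".join(f'{key}="{val}"' for key, val in sorted(labels.items()))
        lines.append(f"{name}{{{label_str}}} {value}")
    return "\n".join(lines) + "\n"


# Hypothetical latency readings for two versions of the same model.
output = render_gauge(
    "model_latency_seconds_p95",
    "95th percentile inference latency",
    [
        ({"model": "churn", "version": "v1"}, 0.18),
        ({"model": "churn", "version": "v2"}, 0.12),
    ],
)
print(output)
```

Labelling samples by model and version is what lets a Grafana dashboard compare versions side by side.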

Centralized logging and error tracking

Centralized logging and error tracking are essential components of a robust MLOps strategy. By aggregating logs and errors from the various components of your ML pipeline, you can quickly identify and troubleshoot issues. Tools like Elasticsearch, Logstash, and Kibana (the ELK stack) or Fluentd can be used to centralize logs, making it easier to correlate events, investigate anomalies, and maintain a clear audit trail of your ML system’s behavior. This centralized approach streamlines debugging and enhances system reliability.
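Log aggregators are much easier to query when each log line is a structured record. The sketch below emits JSON log lines with Python's standard `logging` module. The logger name and the `pipeline_run` and `step` fields are hypothetical, and the example writes to an in-memory buffer only so the result can be inspected, whereas a container would normally log to standard output.

```python
import io
import json
import logging


class JsonFormatter(logging.Formatter):
    """Format each log record as a single JSON object."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Fields passed through `extra=` become attributes on the record.
        for key in ("pipeline_run", "step"):
            if hasattr(record, key):
                entry[key] = getattr(record, key)
        return json.dumps(entry, sort_keys=True)


stream = io.StringIO()  # in a pod, use sys.stdout so the log collector picks it up
handler = logging.StreamHandler(stream)
handler.setFormatter(JsonFormatter())

logger = logging.getLogger("ml_pipeline")
logger.setLevel(logging.INFO)
logger.addHandler(handler)
logger.propagate = False  # avoid duplicate output through the root logger

logger.info("training finished", extra={"pipeline_run": "run-42", "step": "train"})
print(stream.getvalue(), end="")
```

Consistent fields such as a run identifier make it possible to correlate events from different pipeline steps in Kibana or a similar tool.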

Securing your Kubernetes cluster

Securing your Kubernetes cluster is paramount. Begin by ensuring that only authorized personnel access the cluster management plane. Employ strong authentication methods like certificates or tokens. Regularly update and patch your cluster components to protect against known vulnerabilities. Additionally, leverage network security features like firewalls to limit external access.

Implementing RBAC and network policies

Role-Based Access Control (RBAC) helps restrict permissions to specific users or components, reducing the risk of unauthorized access. Network policies, on the other hand, define how pods communicate within the cluster. Implementing RBAC and network policies ensures that only authorized entities can interact with your applications.
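The two mechanisms can be sketched as manifests built in Python and printed as JSON, which `kubectl apply -f` accepts. The namespace, role name, labels, and port are hypothetical, and the rules shown are deliberately narrow: a read-only Role and a NetworkPolicy that admits ingress to the model pods only from pods carrying a specific label.

```python
import json


def read_only_role(namespace: str) -> dict:
    """Build a Role that can only read pods and services in one namespace."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "Role",
        "metadata": {"name": "model-viewer", "namespace": namespace},
        "rules": [{
            "apiGroups": [""],  # "" is the core API group
            "resources": ["pods", "services"],
            "verbs": ["get", "list", "watch"],
        }],
    }


def allow_ingress_from(namespace: str, app_label: str, client_label: str, port: int) -> dict:
    """Build a NetworkPolicy that admits traffic to `app_label` pods from one client."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": f"{app_label}-ingress", "namespace": namespace},
        "spec": {
            "podSelector": {"matchLabels": {"app": app_label}},
            "policyTypes": ["Ingress"],
            "ingress": [{
                "from": [{"podSelector": {"matchLabels": {"app": client_label}}}],
                "ports": [{"protocol": "TCP", "port": port}],
            }],
        },
    }


role = read_only_role("ml-serving")
policy = allow_ingress_from("ml-serving", "churn-model", "api-gateway", 8080)
print(json.dumps([role, policy], indent=2))
```

Note that a Role grants nothing until it is bound to a user or service account with a RoleBinding, which is omitted here for brevity.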

Model security considerations

When deploying machine learning models, consider the security of the models themselves. Encrypt sensitive data used for inference and ensure that your model’s codebase is free from vulnerabilities. Regularly update dependencies and monitor for potential attacks, such as adversarial inputs or model inversion attacks. Finally, maintain a robust audit trail of model interactions for forensic analysis.
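One inexpensive first line of defence against malformed or hostile inputs is to validate every inference request before it reaches the model. The sketch below checks a payload against expected feature names and ranges. The features and bounds are hypothetical, and range checks alone do not stop carefully crafted adversarial inputs.

```python
import math

# Hypothetical feature schema: name -> (minimum, maximum).
FEATURE_RANGES = {"age": (0, 120), "monthly_spend": (0.0, 10_000.0)}


def validate_features(payload: dict) -> list:
    """Return a list of validation errors; an empty list means the payload is acceptable."""
    errors = []
    for name, (lo, hi) in FEATURE_RANGES.items():
        value = payload.get(name)
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, (int, float)):
            errors.append(f"{name}: missing or not numeric")
        elif math.isnan(value) or not lo <= value <= hi:
            errors.append(f"{name}: {value} is outside [{lo}, {hi}]")
    unexpected = sorted(set(payload) - set(FEATURE_RANGES))
    if unexpected:
        errors.append(f"unexpected fields: {', '.join(unexpected)}")
    return errors


good = validate_features({"age": 35, "monthly_spend": 120.5})
bad = validate_features({"age": -4, "monthly_spend": "lots", "debug": True})
print(good)
print(bad)
```

Logging rejected payloads also contributes to the audit trail mentioned above.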

Conclusion

Recap of Key Takeaways

- In this blog, we explored the power of Kubernetes and Kubeflow for managing ML workflows.

- Building reusable Kubeflow pipelines and using Katib for hyperparameter tuning enhances efficiency.

- Kubeflow Serving simplifies model deployment and version control.

- CI/CD integration and monitoring tools are essential for robust MLOps.

- Security practices like RBAC and network policies ensure a secure MLOps environment.

Future Trends in MLOps and Kubernetes

- Kubernetes is evolving with features tailored for ML, like Kubernetes Operators.

- More integration between ML frameworks and Kubernetes for seamless deployments.

- Enhanced ML-specific observability and explainability tools.

- Adoption of GitOps for end-to-end automation of MLOps pipelines.

- Increased focus on ethics and fairness in ML model deployments.

References and Further Reading


1: Kubernetes Documentation

- Official Kubernetes documentation provides in-depth guides on cluster setup, networking, and security.

- Link: Kubernetes Documentation

2: Kubeflow Official Website

- The official Kubeflow website offers comprehensive documentation, tutorials, and resources for deploying and managing ML workflows.

- Link: Kubeflow Official Website

3: Kubeflow Pipelines GitHub Repository

- Access the Kubeflow Pipelines GitHub repository for code examples, samples, and contributions from the community.

- Link: Kubeflow Pipelines on GitHub

4: Kubeflow Serving GitHub Repository

- Explore the Kubeflow Serving GitHub repository for deploying and managing machine learning models with Kubeflow Serving.

- Link: Kubeflow Serving on GitHub

5: Monitoring and Logging with Kubernetes

- Understand Kubernetes-native monitoring and logging with Prometheus, Grafana, and Fluentd.

- Link: Kubernetes Monitoring and Logging

6: Kubernetes Security Best Practices

- Follow Kubernetes security best practices, including RBAC and network policies, to secure your cluster.

- Link: Kubernetes Security Best Practices
